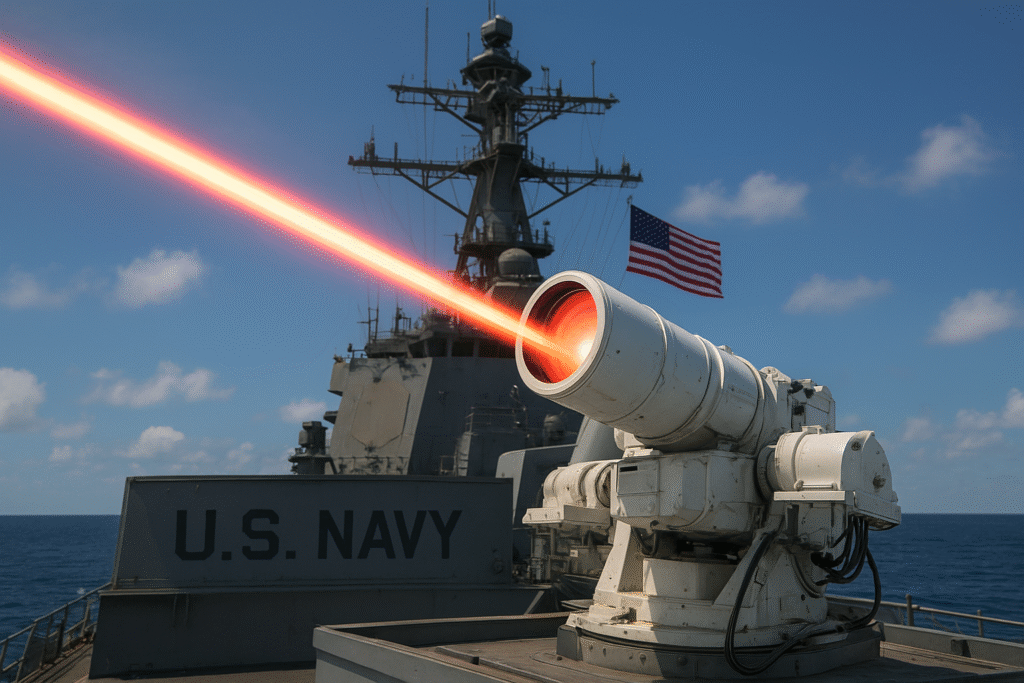

An effort by the Naval Postgraduate School (NPS) aims to let the US Navy remove the human operator from laser weapons used against drones, relying on AI to stop swarm drone attacks. In effect, the USA would hand control of laser weapons to a player that never blinks, never tires, and runs millions of calculations per second. But should we trust it?

Why Laser Weapons?

Traditional weapons struggle against swarm drone attacks because they were built for larger, slower targets, while a swarm is made up of many small, fast-moving, and often autonomous drones.

But wait! There’s a solution for swarm drone attacks, and it’s laser weapons. Where traditional weapons struggle, a laser beam reaches its target at the speed of light. Laser weapons are also more precise, and they have an effectively unlimited magazine as long as there’s power.

Now think about what happens if we combine laser weapons with Artificial Intelligence: human involvement goes down, while accuracy, speed, and reaction time improve.

Potential Benefits

There are several benefits of using AI in laser weapons. I will explain two of them:

Accuracy: Accuracy is the most important factor when countering swarm drone attacks. Humans can always make mistakes, but a well-trained AI system can be extremely accurate.

Speed: Speed is another important factor in swarm attacks. The laser beam travels at the speed of light, and the AI system can pick and track targets faster than any human. It can process huge amounts of information very quickly, without blinking or tiring.

Does it go against AI Ethics?

Well! It depends. If the US Navy takes responsibility for using AI in its laser weapons and trains the AI models well enough to distinguish civilian drones from attackers’ drones, then it probably doesn’t go against AI ethics.

But personally, I think human control is always necessary to prevent accidents, because AI can always be wrong, and it may hit civilian drones.
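To make the idea of "human control" a bit more concrete, here is a minimal sketch of a human-in-the-loop gate. Everything in it is hypothetical: the `Track` type, the `hostile_score` field, and both thresholds are made up for illustration and say nothing about how the NPS or Navy system actually works. The point is only that the AI can act by itself when it is very sure, hold fire when the drone looks civilian, and hand every unclear case to a person.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ENGAGE = "engage"        # AI is confident the drone is hostile
    ASK_HUMAN = "ask_human"  # unclear case, a person decides
    HOLD = "hold"            # drone looks civilian, do nothing


@dataclass
class Track:
    track_id: str
    hostile_score: float  # hypothetical classifier output in [0, 1]


def decide(track: Track, engage_above: float = 0.95, hold_below: float = 0.50) -> Action:
    """Pick an action for one tracked drone.

    The thresholds are illustrative assumptions, not real doctrine.
    """
    if track.hostile_score >= engage_above:
        return Action.ENGAGE
    if track.hostile_score < hold_below:
        return Action.HOLD
    # Anything in between goes to a human operator.
    return Action.ASK_HUMAN


if __name__ == "__main__":
    for t in [Track("a", 0.99), Track("b", 0.70), Track("c", 0.10)]:
        print(t.track_id, decide(t).value)
```

Raising `engage_above` sends more cases to the human, which is slower but safer; lowering it does the opposite. That trade-off is exactly the question this section is about.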

My Perspective

In my opinion, building this type of AI system is a very smart move, especially since it’s built to target drones, not humans. It will protect Navy assets without putting sailors directly at risk. But, as I mentioned before, we should not automate things fully; human intervention is very important, especially in matters of war.

Let me know what you think about it in the comments!