🔑 Key Takeaway

The role of AI in the military involves using intelligent systems to enhance capabilities in surveillance, logistics, and autonomous operations, but it also introduces critical technical challenges in ensuring reliability and preventing algorithmic bias.

- Core Applications: AI is primarily used for processing vast amounts of data in Intelligence, Surveillance, and Reconnaissance (ISR), optimizing logistics, and enabling autonomous systems like drones and robots.

- Reliability is Crucial: Ensuring AI reliability is a major focus, centered on preventing “hallucinations” (confident errors) in high-stakes environments through robust testing and validation.

- Bias Mitigation is Essential: AI models can inherit and amplify human biases, making technical strategies for bias mitigation a key component of responsible AI development in defense.

- Ethical Frameworks: The U.S. DoD has established ethical principles (Responsible, Equitable, Traceable, Reliable, Governable) to guide the development and deployment of military AI.

Read on for a data scientist’s perspective on these technical and ethical challenges.

The role of AI in military applications involves leveraging intelligent systems to augment and accelerate capabilities in areas like surveillance, logistics, and autonomous operations. However, this integration also presents significant technical challenges, particularly in ensuring system reliability and fairness. For tech professionals, understanding these hurdles is key to developing trustworthy and effective systems that can perform under demanding conditions. This article provides a data scientist’s perspective on the technical obstacles of building robust military AI, from its core applications to the critical issues of reliability and algorithmic bias.

The pursuit of military AI has a dual nature: it holds immense potential to improve efficiency and safety, yet it carries a profound responsibility to be implemented ethically and securely. This article breaks down the technical frameworks for ensuring system reliability, explores strategies to mitigate inherent biases, and examines the ethical principles guiding development. Moving from current applications to the core technical and ethical problems helps clarify the complexities of this high-stakes domain.

ℹ️ Transparency

This article explores the technical and ethical dimensions of AI in military contexts based on public research and official reports. Our goal is to inform tech professionals accurately and objectively.

Core Applications of AI in Modern Defense

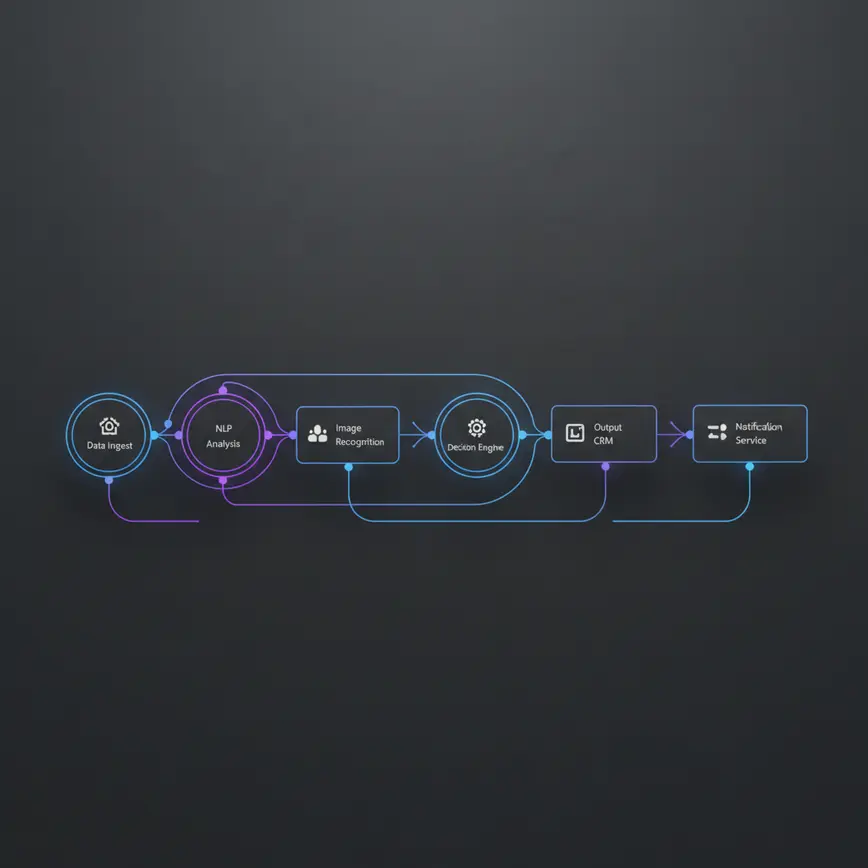

AI is currently used in modern defense to automate data analysis, speed up decision-making, and operate autonomous systems, fundamentally changing how intelligence is gathered and how logistics are managed. The primary driver behind this adoption is the overwhelming volume of data generated by sensors, which humans often cannot analyze effectively in real time. These applications fall into three main areas: intelligence, surveillance, and reconnaissance (ISR); logistics; and autonomous systems.

Intelligence, Surveillance, and Reconnaissance (ISR)

AI algorithms are well suited to processing massive datasets from drones, satellites, and other sensors, and the sheer scale of this data is itself a significant challenge. A U.S. Air Force ISR study, for instance, highlights the volume of data that a single wide-area motion imagery (WAMI) sensor can generate.

Autonomous Systems

AI enables intelligent systems such as drones and robots to navigate complex environments, identify objects of interest, and, in some scenarios, make decisions with reduced human intervention. A 2019 report from the Center for a New American Security (CNAS) states that “AI-enabled autonomy is central to future concepts for intelligent swarms, loyal wingmen, and unmanned ground vehicles.”

Ensuring AI Reliability on the Battlefield

Ensuring AI reliability in military contexts means making sure a system performs its intended function accurately and consistently, without producing dangerous errors, even under the unpredictable conditions of a battlefield. Failures in commercial AI might lead to financial loss or inconvenience; failures in military AI can have lethal consequences. Reliability in this domain is therefore about robustness, predictability, and trustworthiness, not just accuracy. A major focus of reliability engineering is the problem of AI “hallucinations” and other forms of unpredictable behavior.

The Challenge of “Hallucinations” in High-Stakes Environments

AI “hallucinations” occur when a model generates confident but false or nonsensical outputs. In a military setting, this could be catastrophic: an AI system might, for example, misidentify a civilian vehicle as a hostile target. From a data science perspective, these errors often stem from how models are trained and evaluated. According to a 2025 OpenAI technical report, large language models hallucinate in part because their training and evaluation procedures reward guessing over expressing uncertainty: when benchmarks score only accuracy, abstaining earns no credit, which reinforces the tendency to produce plausible but incorrect answers. This makes mitigating hallucinations a top priority.
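The incentive problem can be made concrete with a simple scoring model, offered as an illustrative assumption rather than the report’s exact formulation. Suppose a model believes its answer is correct with probability p, a correct answer scores 1, an abstention scores 0, and a wrong answer costs k ≥ 0 points:

```latex
\begin{aligned}
\mathbb{E}[\text{score} \mid \text{answer}] &= p \cdot 1 + (1 - p)(-k) = p(1 + k) - k \\
\mathbb{E}[\text{score} \mid \text{abstain}] &= 0 \\
\text{answer} \iff p(1 + k) - k > 0 &\iff p > \frac{k}{1 + k}
\end{aligned}
```

With plain accuracy grading (k = 0) the threshold is 0, so guessing always beats abstaining. With k = 1, answering pays off only when p > 1/2, so a well-calibrated model has no reason to guess when p ≤ 1/2 and can say “I don’t know” instead.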

Technical Frameworks for Robust and Trustworthy AI

Several technical approaches are being developed to build more reliable and robust AI. These frameworks are designed to stress-test systems and make their internal processes more transparent to human operators. Key concepts include:

- Red Teaming: This involves adversarially testing an AI system to proactively find weaknesses before it is deployed. Teams of experts intentionally try to trick or break the model to identify potential failure points.

- Explainable AI (XAI): This is a field focused on designing systems whose decision-making processes can be understood and audited by humans. If an AI recommends a course of action, an XAI system can show the operator the data and reasoning it used.

- Uncertainty Quantification: This involves building models that can accurately report their own confidence level. Instead of making a low-confidence guess, the system can flag its uncertainty and “say I don’t know,” prompting a human to review the situation.
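As a minimal sketch of uncertainty quantification with abstention, the Python below averages the class probabilities of a small ensemble of classifiers (a deep-ensemble-style estimate, chosen here as one common option) and defers to a human when the averaged top-class probability falls below a threshold. The function names, example logits, class labels, and the 0.8 threshold are hypothetical; in practice the threshold would be set on validation data, and raw softmax scores usually need calibration before they can be trusted as confidence estimates.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Decision:
    label: Optional[str]  # None means "defer to a human operator"
    confidence: float     # averaged probability of the top class
    entropy: float        # predictive entropy of the averaged distribution (nats)


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the largest logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def ensemble_decision(member_logits: List[List[float]], labels: List[str],
                      min_confidence: float = 0.8) -> Decision:
    """Average the class probabilities of several independently trained models
    and abstain when the averaged top-class probability is below min_confidence."""
    probs = [softmax(logits) for logits in member_logits]
    mean = [sum(p[c] for p in probs) / len(probs) for c in range(len(labels))]
    entropy = -sum(q * math.log(q) for q in mean if q > 0)
    best = max(range(len(labels)), key=lambda c: mean[c])
    if mean[best] < min_confidence:
        return Decision(None, mean[best], entropy)  # flag uncertainty: "I don't know"
    return Decision(labels[best], mean[best], entropy)


labels = ["civilian vehicle", "military vehicle"]
agree = [[0.2, 4.0], [0.1, 3.5], [0.3, 4.2]]      # members agree strongly
disagree = [[3.0, 0.0], [0.0, 3.0], [1.0, 1.2]]   # members contradict each other
print(ensemble_decision(agree, labels).label)     # military vehicle
print(ensemble_decision(disagree, labels).label)  # None -> routed to a human
```

Disagreement between ensemble members spreads the averaged distribution and raises its entropy, which is why the second input is routed to an operator instead of being answered.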

Testing and Validation Protocols for Military-Grade AI

Military AI systems typically require more rigorous and comprehensive testing protocols than their commercial counterparts. High-fidelity simulations are a critical tool, allowing developers to test an AI’s performance across millions of virtual scenarios that would be too dangerous or expensive to replicate in the real world. Furthermore, reliability doesn’t end at deployment. Continuous monitoring and updating of models are essential to prevent “model drift,” a phenomenon where a model’s performance degrades over time as real-world conditions diverge from the data it was trained on.
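One simple way to monitor for model drift is the population stability index (PSI), which compares how a feature or model score is distributed at training time versus in live data. The sketch below uses plain Python with hypothetical data and bin edges; the 0.25 alert level is a commonly used rule of thumb rather than a formal standard, and other drift tests (for example, the Kolmogorov-Smirnov test) are equally valid choices.

```python
import bisect
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values in each of len(edges) + 1 bins; edges must be sorted."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def population_stability_index(reference: Sequence[float], live: Sequence[float],
                               edges: Sequence[float], eps: float = 1e-6) -> float:
    """PSI = sum over bins of (live - ref) * ln(live / ref).
    eps keeps empty bins from causing log(0) or division by zero."""
    ref = bin_fractions(reference, edges)
    cur = bin_fractions(live, edges)
    return sum((c - r) * math.log((c + eps) / (r + eps)) for r, c in zip(ref, cur))


# Hypothetical sensor feature: training-time values vs. values seen after deployment.
reference = [i / 100 for i in range(100)]       # spread evenly over [0, 1)
shifted = [0.5 + i / 200 for i in range(100)]   # now concentrated in [0.5, 1)
edges = [0.25, 0.5, 0.75]

psi = population_stability_index(reference, shifted, edges)
if psi > 0.25:  # rule-of-thumb alert level, not a standard
    print(f"PSI={psi:.2f}: significant drift, review or retrain the model")
```

Each term is non-negative, so PSI is zero only when the binned distributions match; in a deployed pipeline the same check would run on a schedule against each monitored feature.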

Preventing AI Bias in Defense Systems

Preventing AI bias in military systems involves actively identifying and mitigating systematic errors in AI models that could lead to unfair or discriminatory outcomes, such as disproportionately targeting certain demographic groups. Bias is not a moral failing of the AI itself but a technical flaw that often stems from the data and algorithms used to build it. In a military context, this flaw can have devastating consequences for civilian populations and undermine the legitimacy of operations, which makes preventing bias and discrimination a critical concern. Understanding where bias comes from is the first step to fixing it.

Where Bias Originates: Data, Algorithms, and Human Oversight

AI bias is easiest to understand through its primary sources, since it can be introduced at multiple stages of the development and deployment pipeline:

- Data Bias: This is one of the most common sources. If the data used to train a model is not representative of the real-world environment, the model’s performance will be skewed. For example, a facial recognition system trained mostly on images of one ethnicity will likely be less accurate when identifying individuals from other ethnic groups.

- Algorithmic Bias: Some algorithms can inadvertently create or amplify biases, even if the training data is balanced. This can happen through the way the algorithm learns to prioritize certain features or variables over others.

- Human Bias: Human operators can also introduce bias through their interpretation of or interaction with AI recommendations. If an operator has a pre-existing bias, they may be more likely to accept a flawed AI suggestion that confirms it.
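A standard first check for data bias is disaggregated evaluation: computing accuracy separately for each subgroup rather than reporting a single aggregate. The minimal sketch below uses hypothetical group names and labels; its point is that a respectable overall score can hide a large gap between groups.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterable, Tuple


def accuracy_by_group(records: Iterable[Tuple[Hashable, str, str]]) -> Dict[Hashable, float]:
    """records are (group, true_label, predicted_label); returns accuracy per group."""
    correct: Dict[Hashable, int] = defaultdict(int)
    total: Dict[Hashable, int] = defaultdict(int)
    for group, truth, pred in records:
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


# Hypothetical evaluation set: group_a dominated the training data, group_b did not.
records = [
    ("group_a", "match", "match"), ("group_a", "no_match", "no_match"),
    ("group_a", "match", "match"), ("group_a", "no_match", "no_match"),
    ("group_b", "match", "no_match"), ("group_b", "no_match", "no_match"),
    ("group_b", "match", "match"), ("group_b", "no_match", "match"),
]
per_group = accuracy_by_group(records)
overall = sum(t == p for _, t, p in records) / len(records)
gap = max(per_group.values()) - min(per_group.values())
print(per_group, overall, gap)  # {'group_a': 1.0, 'group_b': 0.5} 0.75 0.5
```

Here the overall accuracy of 75% conceals that the model is right only half the time for group_b.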

Real-World Examples of AI Bias and Their Implications

Hypothetical scenarios help illustrate the potential consequences of AI bias:

- Example 1: An object recognition system for a drone is trained on thousands of hours of footage of military vehicles in desert environments. When deployed in a snowy or dense urban landscape, it may fail to correctly identify similar vehicles because its training data was not diverse enough.

- Example 2: A threat assessment algorithm is built using historical data that reflects past patterns of surveillance. If that historical data encodes racial or ethnic bias, the new system may learn to flag individuals from a certain region or demographic as higher risk, leading to discriminatory targeting and surveillance.
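For a scenario like Example 2, one way to audit a deployed threat-scoring model is to compare how often it flags members of each group. This is a hypothetical sketch: the region names and decisions are invented, and equal flag rates (demographic parity) is only one fairness criterion; it can conflict with others, such as equal error rates, when the true base rates differ between groups.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def flag_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """decisions are (group, flagged_as_high_risk); returns each group's flag rate."""
    flagged: Counter = Counter()
    total: Counter = Counter()
    for group, is_flagged in decisions:
        total[group] += 1
        flagged[group] += int(is_flagged)
    return {g: flagged[g] / total[g] for g in total}


def disparity_ratio(rates: Dict[str, float]) -> float:
    """Lowest flag rate divided by the highest: 1.0 means every group is flagged
    at the same rate; values near 0 mean one group is flagged far more often."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical audit of a threat-scoring model's outputs on two regions.
decisions = [("region_a", i < 1) for i in range(10)] + [("region_b", i < 4) for i in range(10)]
rates = flag_rates(decisions)
print(rates, disparity_ratio(rates))  # {'region_a': 0.1, 'region_b': 0.4} 0.25
```

A ratio this low would not prove discrimination on its own, but it is the kind of signal that should trigger a review of the training data and the model before further use.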

Technical Strategies for Bias Mitigation in AI Models

From a data science perspective, there are several technical strategies for mitigating AI bias. In their widely cited survey, Mehrabi et al. group bias mitigation techniques into three main approaches based on where they are applied in the machine learning pipeline:

- Pre-processing: These techniques are applied to the data before the model is trained. This could involve re-sampling the data to ensure all demographic groups are fairly represented or augmenting datasets to add more diversity.

- In-processing: These techniques are applied during the model’s training process. This might involve adding fairness constraints to the algorithm’s objective function, essentially telling the model to optimize for both accuracy and equity simultaneously.

- Post-processing: These techniques are applied to the model’s output after it has been trained. This could involve adjusting the decision thresholds for different groups to ensure that the outcomes are equitable, even if the model’s raw scores show some disparity.
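Two of these strategies can be sketched in a few lines of plain Python. The pre-processing function implements reweighing in the style of Kamiran and Calders, which gives each (group, label) combination the weight P(group)·P(label)/P(group, label); the post-processing function picks a per-group decision threshold so that each group reaches the same true positive rate, a criterion often called equal opportunity. Function names and data are hypothetical, and in-processing is not shown. Maintained implementations exist in libraries such as AI Fairness 360 (its Reweighing pre-processor) and Fairlearn (its ThresholdOptimizer).

```python
import math
from collections import Counter
from typing import Dict, Sequence, Tuple


def reweighing_weights(groups: Sequence[str], labels: Sequence[int]) -> Dict[Tuple[str, int], float]:
    """Pre-processing: weight each (group, label) cell by P(group) * P(label) / P(group, label)
    so that group membership and label are statistically independent in the weighted data."""
    n = len(labels)
    group_counts, label_counts = Counter(groups), Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return {
        (g, y): (group_counts[g] / n) * (label_counts[y] / n) / (count / n)
        for (g, y), count in joint_counts.items()
    }


def threshold_for_tpr(scores: Sequence[float], labels: Sequence[int], target_tpr: float) -> float:
    """Post-processing: highest threshold at which at least target_tpr (0 < target_tpr <= 1)
    of this group's true positives score >= threshold."""
    positives = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    needed = max(1, math.ceil(target_tpr * len(positives) - 1e-9))  # tolerance for float error
    return positives[needed - 1]


# Hypothetical training set: positives are over-represented in group "a".
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
print(reweighing_weights(groups, labels))
# a-positives and b-negatives are down-weighted (2/3); a-negatives and b-positives up-weighted (2.0)

# Hypothetical model scores that run systematically lower for group "b".
thr_a = threshold_for_tpr([0.9, 0.8, 0.7, 0.4], [1, 1, 1, 0], target_tpr=0.66)
thr_b = threshold_for_tpr([0.6, 0.5, 0.45, 0.2], [1, 1, 1, 0], target_tpr=0.66)
print(thr_a, thr_b)  # 0.8 0.5
```

Because group b’s scores run lower, it receives a lower threshold (0.5 versus 0.8), and both groups end up with two of their three true positives flagged.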

The Ethical Framework of Military AI

The ethical framework for military AI centers on ensuring these powerful systems are used responsibly and in accordance with international law and moral principles, with a primary focus on maintaining human accountability. There is a robust global debate about the ethics of AI in warfare and a significant push for clear guidelines to prevent an uncontrolled arms race in autonomous weapons. These guidelines often take the form of rules, policies, and best practices that govern AI development and deployment. A key flashpoint in this debate is the concept of Lethal Autonomous Weapons.

The Debate on Lethal Autonomous Weapons (LAWs)

Lethal Autonomous Weapons (LAWs) are a class of AI-enabled weapon systems that can independently search for, identify, target, and kill human beings without direct human control. The development of such systems raises profound ethical questions. The International Committee of the Red Cross (ICRC) outlines key ethical challenges, including the dehumanization of targets, potential erosion of human moral judgment, and algorithmic bias. In a 2024 analysis, the ICRC asserts that such systems “should not be granted the power to decide who should live and die.” This perspective is central to the ongoing international conversation about the future of AI in warfare.

Examining the DoD’s Ethical AI Principles

In response to these challenges, the U.S. Department of Defense formally adopted five ethical principles for AI in 2020 to guide the design, development, and use of AI capabilities. These principles serve as a foundational framework for developers and policymakers:

- Responsible: Humans should exercise appropriate levels of judgment and remain responsible for the development, deployment, and outcomes of AI systems.

- Equitable: The department will take deliberate steps to minimize unintended bias in AI capabilities.

- Traceable: AI systems should be sufficiently understandable to technical experts, possess transparent methodologies, and be auditable.

- Reliable: AI systems should have an explicit, well-defined domain of use, and their safety and robustness should be tested across that domain.

- Governable: AI systems should be designed and engineered to fulfill their intended function while possessing the ability to detect and avoid unintended consequences, and the capacity to be disengaged if they demonstrate unintended behavior.

FAQ – Answering Key Questions About AI in the Military

How is AI used in the military?

AI is primarily used in the military to process vast amounts of data, enhance decision-making, and operate autonomous systems. Key applications include analyzing intelligence from drones and satellites (ISR), optimizing supply chains and predicting equipment failures (logistics), and powering unmanned vehicles for reconnaissance or transport. The goal is to increase speed, efficiency, and safety for human personnel.

What is AI bias?

AI bias refers to systematic errors in an AI system’s output that result in unfair or discriminatory outcomes. It is not a moral failing of the machine but a technical flaw that typically originates from incomplete or unrepresentative training data. For example, a facial recognition system trained mostly on one demographic may be less accurate for others. Mitigating this bias is a critical part of responsible AI development.

What is AI ethics?

AI ethics is a branch of ethics that studies the moral and social impact of artificial intelligence systems. It aims to create principles and guidelines for the responsible design, development, and use of AI. Key topics include fairness and bias, accountability for AI decisions, transparency in algorithms, and the impact on human rights. In military contexts, it focuses heavily on ensuring meaningful human control over lethal force.

Does the military use AI?

Yes, militaries around the world, including the U.S. military, actively use AI in a wide range of non-lethal and lethal applications. It is integrated into systems for intelligence analysis, logistics, predictive maintenance, surveillance, navigation, and cyber defense. The use of AI is considered a critical component of modernizing defense capabilities, though its application in autonomous weapons remains a subject of intense international debate.

What are the disadvantages of AI in warfare?

The main disadvantages of AI in warfare include the risk of technical failures, the potential for algorithmic bias, and the danger of escalating conflicts. AI systems can make catastrophic errors (“hallucinations”), perpetuate discrimination if trained on biased data, and accelerate decision-making to a speed that humans cannot manage, increasing the risk of accidental war. Furthermore, there are significant ethical concerns about delegating life-or-death decisions to machines.

Should AI be used in warfare?

The question of whether AI should be used in warfare is a complex ethical debate with no simple consensus. Proponents argue it can increase precision and reduce risk to soldiers. Opponents warn of the dangers of autonomous weapons, algorithmic errors, and the moral implications of removing human judgment from lethal decisions. Most policy discussions focus on establishing strict guidelines and ensuring “meaningful human control” rather than an outright ban on all military AI.

Limitations, Alternatives, and Professional Guidance

Research Limitations

It is important to acknowledge that much of the performance data on specific military AI systems is classified. As a result, public analysis often relies on official reports, academic studies, and assessments from think tanks. The field of AI is also evolving rapidly, so the capabilities and limitations of these systems are constantly changing. Research into the long-term implications of widespread military AI for strategic stability is ongoing and remains a subject of considerable debate among experts in the defense sector.

Alternative Approaches

As an alternative to fully autonomous systems, many current applications focus on a “human-in-the-loop” framework. In this model, the AI provides analysis and recommendations, but a human operator makes the final, critical decision. Another approach is the “human-on-the-loop” system, where an AI is permitted to act autonomously within a pre-defined scope, but a human supervisor can intervene and override its actions at any time. These approaches are designed to leverage the speed and data-processing power of AI while preserving human judgment and accountability, especially in high-stakes situations.
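The difference between the two oversight models comes down to where the human approval step sits. The sketch below is a hypothetical illustration, not the design of any fielded system: the Controller class, the approve callback, and the within_scope flag are all assumptions. In-the-loop mode asks a human before every action; on-the-loop mode acts on its own inside a pre-defined scope, sends anything outside it to a human (a design choice assumed here), and can be disengaged by a supervisor at any time, echoing the DoD “Governable” principle.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class Oversight(Enum):
    IN_THE_LOOP = "in"  # a human approves every action before it is executed
    ON_THE_LOOP = "on"  # the system acts within its scope; a human supervises and can halt it


@dataclass
class Recommendation:
    action: str
    within_scope: bool  # True if the action lies inside the pre-defined operating envelope


class Controller:
    def __init__(self, mode: Oversight, approve: Callable[[Recommendation], bool]) -> None:
        self.mode = mode
        self.approve = approve   # stands in for a human operator's decision
        self.disengaged = False  # once set, the system takes no further actions

    def disengage(self) -> None:
        """Supervisor override: stop the system from acting."""
        self.disengaged = True

    def execute(self, rec: Recommendation) -> Optional[str]:
        """Return the action actually carried out, or None if it was blocked."""
        if self.disengaged:
            return None
        if self.mode is Oversight.ON_THE_LOOP and rec.within_scope:
            return rec.action  # autonomous within scope, still subject to disengage()
        # In-the-loop mode, or an out-of-scope request in on-the-loop mode: ask a human.
        return rec.action if self.approve(rec) else None


def deny_all(rec: Recommendation) -> bool:
    return False  # a cautious operator who rejects every request


in_loop = Controller(Oversight.IN_THE_LOOP, approve=deny_all)
on_loop = Controller(Oversight.ON_THE_LOOP, approve=deny_all)
routine = Recommendation("reroute supply convoy", within_scope=True)
print(in_loop.execute(routine))  # None: nothing happens without approval
print(on_loop.execute(routine))  # reroute supply convoy
on_loop.disengage()
print(on_loop.execute(routine))  # None: supervisor has halted the system
```

Keeping the disengage check first in execute means the supervisor’s override takes precedence over every other path, including in-scope autonomous actions.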

Professional Consultation

Organizations involved in developing or deploying AI in sensitive domains are often advised to consult with multidisciplinary experts. A purely technical approach may be insufficient to address the complex challenges posed by military AI. This consultation should include not only data scientists and engineers but also ethicists, legal scholars specializing in international humanitarian law, and military strategists to ensure that systems are developed and used in a responsible and effective manner.

Conclusion

The role of AI in military contexts presents a dual reality: it is a powerful tool for enhancing capabilities, but it also carries profound technical and ethical responsibilities. From a data science perspective, the core challenges of ensuring reliability and preventing bias are paramount. Building systems that are robust, transparent, and fair is the critical path forward for any responsible application in this domain. The performance of these systems can vary, and outcomes depend on the quality of their design and oversight.

For professionals seeking to understand the practical challenges and solutions in building effective AI, exploring these topics is essential. The principles of reliability, fairness, and governability discussed here are not limited to the military; they are central to the successful application of AI in any high-stakes field. For more deep dives into how these concepts apply in other areas of AI, subscribe to Hussam’s AI Blog.

References

- U.S. Department of Defense. (2020). Recommendation to the Secretary of Defense: DoD Ethical Principles for Artificial Intelligence. Retrieved from https://media.defense.gov/2021/May/27/2002730593/-1/-1/0/IMPLEMENTING-RESPONSIBLE-ARTIFICIAL-INTELLIGENCE-IN-THE-DEPARTMENT-OF-DEFENSE.PDF

- Mehrabi, N., Morstatter, F., Saxena, N., Lerman, K., & Galstyan, A. (2019). A Survey on Bias and Fairness in Machine Learning. arXiv preprint arXiv:1910.03586. Retrieved from https://arxiv.org/abs/1910.03586

- OpenAI. (2025). Why Language Models Hallucinate. Retrieved from https://cdn.openai.com/pdf/d04913be-3f6f-4d2b-b283-ff432ef4aaa5/why-language-models-hallucinate.pdf

- Cook, M. (2021). The Future of Artificial Intelligence in ISR Operations. Air & Space Power Journal. Retrieved from https://www.airuniversity.af.edu/Portals/10/ASPJ/journals/Volume-35SpecialIssue/F-Cook.pdf

- Horowitz, M. C. (2019). Strategic Competition in an Era of AI. Center for a New American Security. Retrieved from https://www.cnas.org/publications/reports/strategic-competition-in-an-era-of-ai

- International Committee of the Red Cross. (2024). The road less travelled: Ethics in the international regulatory debate on autonomous weapon systems. ICRC Law & Policy Blog. Retrieved from https://blogs.icrc.org/law-and-policy/2024/04/25/the-road-less-travelled-ethics-in-the-international-regulatory-debate-on-autonomous-weapon-systems/